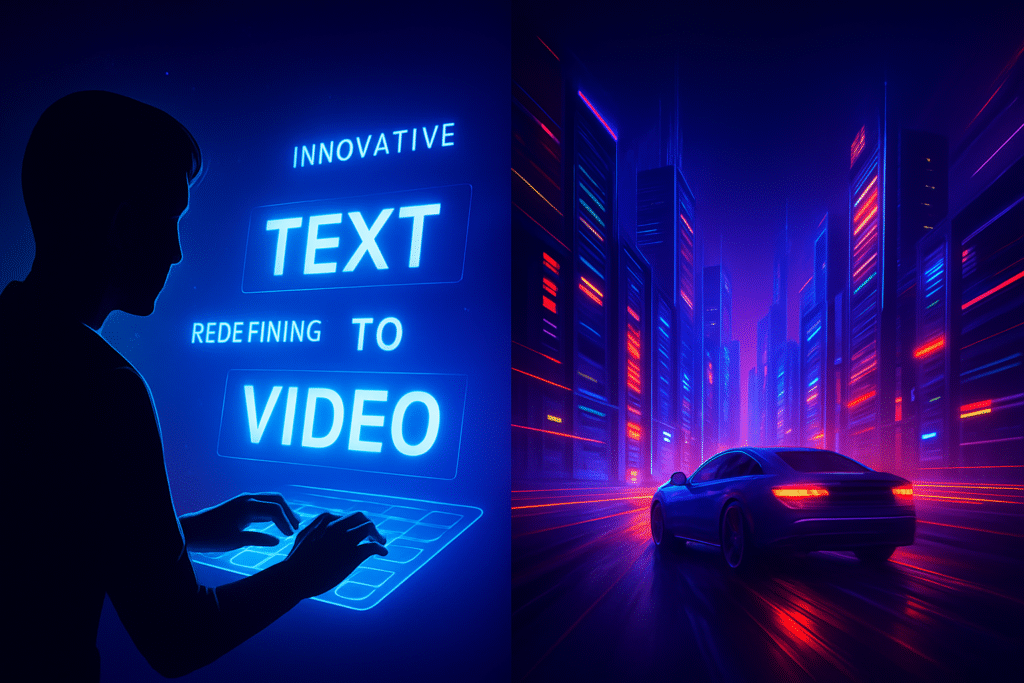

The world of video creation has a powerful new force. It doesn’t rely primarily on traditional production pipelines. It starts with words.

OpenAI, the team that brought us the seismic shift of ChatGPT, has unleashed Sora AI, a text-to-video model that is already being hailed as the “GPT moment” for visual content. This isn’t just about generating a short clip; it’s about synthesizing dynamic scenes from simple text prompts. The implications are enormous for entertainment, education, and marketing.

If you create content, consume media, or simply want to understand the next great leap in technology, you need to grasp what Sora AI is doing. As David Ogilvy understood, the Big Idea changes everything and Sora represents the Big Idea for video.

Sora AI: Clarity on the Complex Technology

What exactly is Sora, and why does it represent such a significant advancement? The core innovation lies in how it perceives and generates time.

1. The Model: Diffusion + Transformer

Sora AI doesn’t just create individual realistic images and stitch them together, which was a common limitation of earlier models. Instead, it’s built on a fusion of two powerful AI architectures:

Diffusion Model: This is the engine that generates high-fidelity imagery. It starts with a screen of static noise and gradually “denoises” it until a photorealistic scene matching the prompt emerges.

Transformer Architecture: This is key to video coherence. Borrowed from Large Language Models (LLMs), the transformer allows Sora to consider multiple video frames simultaneously: its attention mechanism lets every part of a clip draw on information from every other part. This addresses the significant challenge of temporal inconsistency, helping objects and characters maintain greater continuity as they move through frames.
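The diffusion half of this pairing can be made concrete with a toy sampling loop. The sketch below follows the standard DDPM recipe (a linear noise schedule over 1,000 steps; these values are assumptions, not Sora’s), but it replaces the trained neural network with a hypothetical “oracle” that already knows the single target it should produce. It therefore shows only the mechanics of stepping from static toward an image; Sora’s actual sampler, schedule, and network are not public.

```python
import math
import random

def linear_beta_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise amounts: a linear DDPM-style schedule (assumed values)."""
    return [beta_start + (beta_end - beta_start) * i / (steps - 1) for i in range(steps)]

def oracle_noise_predictor(x_t, alpha_bar_t, target):
    """Hypothetical stand-in for the trained network. Because the toy
    'dataset' is one known vector, the noise in x_t can be computed exactly.
    A real model has to learn to estimate this noise from data."""
    return [(xi - math.sqrt(alpha_bar_t) * ti) / math.sqrt(1.0 - alpha_bar_t)
            for xi, ti in zip(x_t, target)]

def sample(target, steps=1000, seed=0):
    """Start from pure static and denoise step by step (DDPM ancestral sampling)."""
    rng = random.Random(seed)
    betas = linear_beta_schedule(steps)
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)  # cumulative product: how much signal survives to step t

    x = [rng.gauss(0.0, 1.0) for _ in target]  # the "screen of static noise"
    for t in reversed(range(steps)):
        eps = oracle_noise_predictor(x, alpha_bars[t], target)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            sigma = math.sqrt(betas[t])
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]  # re-inject a little noise
        else:
            x = mean  # the final step is deterministic
    return x

# A three-"pixel" image stands in for a video frame.
# Prints values equal to [0.5, -1.0, 2.0] up to floating-point rounding.
print(sample([0.5, -1.0, 2.0]))
```

With a real model the predictor is only approximate, which is why output quality depends so heavily on training.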
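The transformer half rests on scaled dot-product self-attention. In the simplified sketch below, each frame is summarized by a small, made-up feature vector, and the query, key, and value projections are left as the identity to keep it short. Real transformers learn those projections and use many attention heads, and OpenAI describes Sora as attending over spacetime patches rather than whole frames. The point it illustrates is that every frame’s output is a weighted blend of all frames, which is how information about an object can carry across time.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product self-attention.
    Simplification: queries, keys, and values are the tokens themselves
    (identity projections); real transformers learn these projections."""
    d = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in tokens]
        w = softmax(scores)
        # Each output is a weighted average of every token, so each frame
        # "sees" all the others at once.
        blended = [sum(wj * value[i] for wj, value in zip(w, tokens)) for i in range(d)]
        outputs.append(blended)
        weights.append(w)
    return outputs, weights

# Hypothetical 2-number summaries of three frames; the first two look alike.
frames = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(frames)
# Frame 0 puts more weight on the similar frame 1 than on the dissimilar frame 2.
print([round(w, 3) for w in weights[0]])
```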

2. The Power of “Patches”

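To handle the complexity of video, Sora breaks its input into “patches.” According to OpenAI’s technical report, videos are first compressed into a lower-dimensional latent representation, which is then cut into spacetime patches: small blocks that each cover a region of the frame over a short stretch of time. These patches serve as the transformer’s tokens, much as words (or word pieces) do in an LLM.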

Think of a video as layers of information across space and time. Traditional image AI handles only 2D spatial information. Sora’s architecture incorporates the temporal dimension as well, and because a video of any size simply yields more or fewer patches, the model can be trained on videos of varying lengths, resolutions, and aspect ratios.

This represents a conceptual framework for understanding how Sora achieves greater temporal coherence, though the specific technical implementation details remain proprietary.
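To make the patch idea concrete, here is a minimal sketch that cuts a video, stored as nested lists indexed `video[t][y][x]`, into non-overlapping blocks spanning a few frames and a small pixel region, then flattens each block into one token. It works on raw grayscale pixels with an arbitrary patch size for simplicity; OpenAI’s report describes patching a compressed latent representation, and the real patch sizes are not public. The helper names are hypothetical.

```python
def spacetime_patches(video, pt, ph, pw):
    """Cut video[t][y][x] into non-overlapping pt x ph x pw blocks and flatten
    each block into one token (a list of numbers)."""
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    if frames % pt or height % ph or width % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    tokens = []
    for t0 in range(0, frames, pt):          # step through time
        for y0 in range(0, height, ph):      # then down the frame
            for x0 in range(0, width, pw):   # then across it
                tokens.append([video[t][y][x]
                               for t in range(t0, t0 + pt)
                               for y in range(y0, y0 + ph)
                               for x in range(x0, x0 + pw)])
    return tokens

def blank_video(frames, height, width):
    """A placeholder grayscale clip filled with zeros."""
    return [[[0.0] * width for _ in range(height)] for _ in range(frames)]

# Arbitrary patch size: 2 frames x 8 x 8 pixels.
landscape = spacetime_patches(blank_video(8, 16, 32), 2, 8, 8)
portrait = spacetime_patches(blank_video(16, 32, 16), 2, 8, 8)
print(len(landscape), len(landscape[0]))  # 32 tokens of 128 values
print(len(portrait), len(portrait[0]))    # 64 tokens of 128 values
```

A short landscape clip and a longer portrait clip both become plain sequences of equal-sized tokens; only the sequence length changes, which is what lets one model train on mixed durations and aspect ratios.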

The Impressive Capabilities of Sora AI

The true impact of Sora becomes clear when you see what it can create. It moves beyond simple, short clips to a level of detail that feels genuinely innovative, though not without limitations.

A. Photorealism and Improved Scene Coherence

Sora 2, OpenAI’s second-generation video model, demonstrates significant advances in generating videos that approach the quality of real footage, particularly in controlled demo conditions.

Complex Scenery: Promotional examples showcase sweeping cinematic shots of detailed environments like neon-lit Tokyo streets with wet pavement and reflections. Real-world results vary based on prompt complexity and generation parameters.

Enhanced Continuity: The model shows improved ability to maintain characters and objects even when they are temporarily occluded (hidden) by other elements. However, the experience isn’t uniform: many users report artifacts, flickering, distortion, and inconsistent physics across different generations. When it works well, the consistency represents a meaningful step forward compared to earlier text-to-video tools.

Critical reality check: Sora still struggles with complex physics simulations, spatial relationships (like distinguishing left from right), and fine causal details. The technology is impressive but actively evolving, not production-ready for all use cases.

B. Storytelling Potential

This isn’t just a tool for creating backgrounds; it’s opening new doors for narrative exploration.

Sora can generate scenes based on detailed text prompts, maintain visual style across generated sequences, and support multiple creation modes:

- Text-to-Video: Generate entirely new scenes from written descriptions

- Image-to-Video: Animate still images, though results vary significantly; some users report limited motion or weak animation in current implementations

- Video Remixing: Use existing clips as creative starting points

C. The New Social Dynamic: The Sora 2 App

OpenAI didn’t just release a model; they launched a platform. The Sora 2 App (currently in limited rollout in the U.S. and Canada, not yet globally available) is a short-form video network where all content is AI-generated.

Cameos: A distinctive feature introduced in Sora 2 that allows you to upload a short video/audio clip of yourself. This creates an AI-powered “cameo” that you can then cast into videos you or your friends generate (with permission). This puts personalized content, with you and your friends as the cast, within reach of any user.

Remix Culture: The app encourages users to take existing videos and “Remix” them by adjusting prompts, fostering new forms of creative collaboration.

The Road Ahead: Clarity, Ethics, and Opportunity

As with any powerful new technology, Sora AI introduces significant opportunities alongside serious ethical responsibilities.

Opportunities for the Creator Economy

For creatives, Sora represents a substantial unlock:

Rapid Prototyping: Filmmakers and advertisers can move from concept to high-fidelity storyboard in minutes rather than weeks.

Expanded Creative Possibilities: Independent creators can bring production-value visuals to life without traditional Hollywood budgets. Ideas that were previously out of reach on a small budget become achievable.

Personalized Marketing: Brands can create hyper-specific, localized, or personalized content that resonates more immediately with target audiences.

The Non-Negotiable Challenge of Ethics

The ability to generate increasingly realistic, persuasive media necessitates clear guardrails.

Trust and Synthetic Media: Distinguishing AI-generated content from reality will only get harder. OpenAI has committed to embedding metadata tags and using content provenance standards like C2PA (Coalition for Content Provenance and Authenticity), an industry standard for cryptographically signed provenance records, to identify content as AI-generated. While watermarking approaches are being implemented, the visibility and consistency of these markers across all outputs continue to evolve.
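Provenance metadata works by binding a signed claim to the exact content it describes. The sketch below illustrates that binding with Python’s standard library: it hashes the video bytes, records a hypothetical `ai_generated` claim, and signs the result. It is not the C2PA format. Real C2PA manifests use certificate-based public-key signatures and a standardized structure embedded in the file, whereas this toy uses a shared-secret HMAC purely for brevity, and the key and field names are invented for illustration.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret. Real C2PA signing uses certificate-based
# public-key signatures, not a shared key like this.
SIGNING_KEY = b"demo-key-not-for-production"

def _sign(claim):
    """Sign a canonical (sorted-key) JSON encoding of the claim."""
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()

def make_manifest(video_bytes, generator):
    """Bind an 'AI-generated' claim to the exact bytes of a video."""
    claim = {
        "content_sha256": hashlib.sha256(video_bytes).hexdigest(),
        "generator": generator,   # illustrative field names, not C2PA's schema
        "ai_generated": True,
    }
    return {"claim": claim, "signature": _sign(claim)}

def verify(video_bytes, manifest):
    """True only if the claim is untampered AND matches these exact bytes."""
    if not hmac.compare_digest(_sign(manifest["claim"]), manifest["signature"]):
        return False  # the claim was edited after signing
    return manifest["claim"]["content_sha256"] == hashlib.sha256(video_bytes).hexdigest()

clip = b"placeholder video bytes"
manifest = make_manifest(clip, "example-video-model")
print(verify(clip, manifest))            # True
print(verify(clip + b"edit", manifest))  # False: content changed after signing
```

Altering either the video bytes or the claim makes verification fail. The flip side is that a manifest can simply be stripped from a file, which is one reason watermarking is pursued alongside metadata.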

Consent and Likeness: The Cameo feature is opt-in, which is designed to keep users in control of their digital likeness. The platform also has policies intended to prevent unauthorized depictions of public figures and the generation of harmful content.

Conclusion: The End of Production Scarcity, The Rise of Original Vision

Sora AI is more than a technical achievement; it signals a fundamental shift in the economics of visual content creation. As production costs for synthetic video content decrease dramatically, the currency of the future isn’t technical execution skill; it’s originality and unique perspective.

As the cost of execution diminishes, the value of the Big Idea, the compelling prompt, and the distinctly human story rises sharply. The creator who thinks clearly and tells authentic stories will win.

The meta-lesson? When tools become universally accessible, differentiation comes from what those tools cannot provide: genuine insight, cultural understanding, and emotional truth.

Your Perspective Matters 💡

Discussion prompts:

- Will Sora-style AI become the standard tool for video creation across industries?

- How should creators balance synthetic content with traditional production techniques?

- What safeguards would make you more comfortable with AI-generated video?

Share your thoughts in the comments below! If you’re exploring how emerging technologies reshape media and creativity, subscribe to our newsletter for insights on navigating the AI-powered content landscape.

The Takeaway: Sora 2 doesn’t eliminate the need for creative vision; it amplifies it. In a world where anyone can generate video, the most valuable skill becomes knowing what story to tell and why it matters.

Disclaimer: This blog post was generated with the help of artificial intelligence. Readers are encouraged to verify facts independently.